God is not a bird

Why AGI & ASI are still far away

I. ✈️

Flying is crazy.

The simple fact that we can step inside a large metal box and that box proceeds to soar thousands of miles in the sky, keeping us safely in our seats as it flies us for a couple of hours till we reach our destination - the fact that we, as humans, can literally FLY from one place to another - is nothing short of a miracle.

But that isn't the crazy part.

The crazy part of flying, of aeroplanes specifically, is that our planes don't have flapping wings.

By all rights, our species should've looked at the skies, the domain that was unconquerable for centuries, the land of birds and clouds and stars, and decided to accurately model a bird & build a mechanical one in order for us to fly.

And that's exactly what they did, at first.

This was Leonardo Da Vinci’s attempt at creating a plane in 1505. It didn’t work.

Others, such as Clément Ader, tried putting steam engines inside bird-like structures in 1897, which also failed.

It wasn't until the Wright brothers came along and finally built a working prototype that humanity was able to touch the skies.

This first plane, a marvel of engineering, relied on principles that today's planes still use! For instance, it controlled the aircraft's direction and altitude by manipulating fixed wings rather than mimicking the flapping motion of birds. The three-axis control system and the horizontal stabilizer are also still found on modern planes, even if the mechanisms have evolved (for example, the Wright brothers placed the horizontal stabilizer at the front of the plane, while in modern planes it’s usually at the tail).

Now, if the Wright brothers had instead decided to model their plane on something that closely resembled a bird, like everyone else had been doing (and failing at) for centuries, would we still have human flight?

Unless someone else came along and built a similar plane, the answer is no. We would've been confined to the land and seas, dreaming of one day soaring the skies with our bird-shaped planes that don't quite work.

I believe we're at a similar point in history with AI.

Despite the marvellous rate of progress, despite the current hype that was decades in the making, despite the billions and trillions(?) in funding, despite the fact that we finally have machines that can talk - I don't think we can realistically get to an AGI or ASI, a God that rises from the machines and solves all our problems, with the current stack.

Why?

Because we’re doing the same thing that people before the Wright brothers were doing - taking inspiration from what exists physically and naturally, and trying to build an accurate version of that.

But unfortunately, God is not a bird.

Let me explain.

II. 🧠

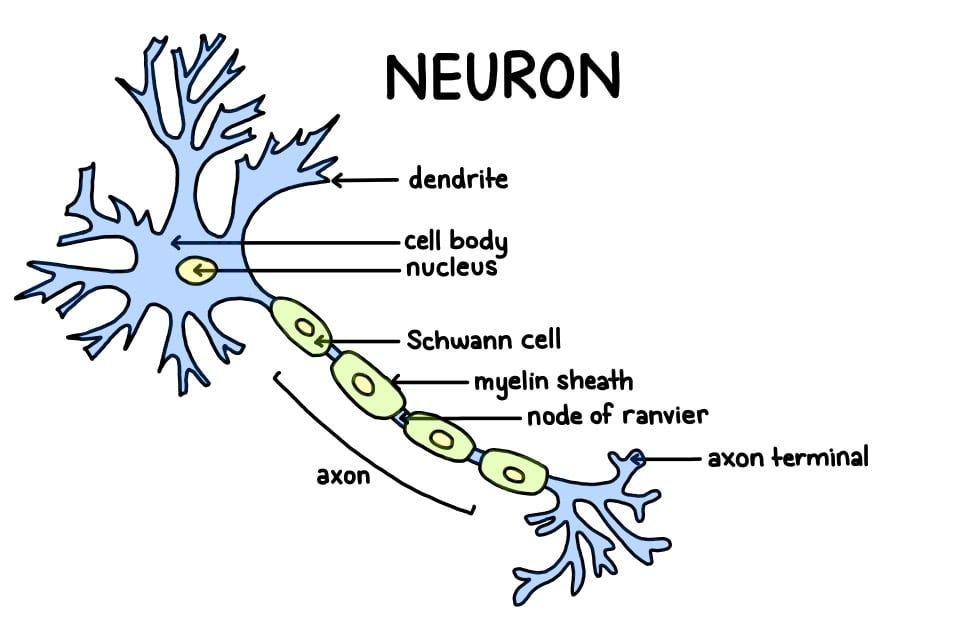

This is a neuron.

Your brain has roughly 86 billion of them. It is believed that the long spiky bits on the left receive inputs, and the neuron then passes a signal along (as an output) to other neurons. In neural networks, the “neuron” does the same thing - takes some inputs, gives an output.

That’s the best way I can describe this without this becoming a computational biology essay. But here’s some history to understand the big picture.

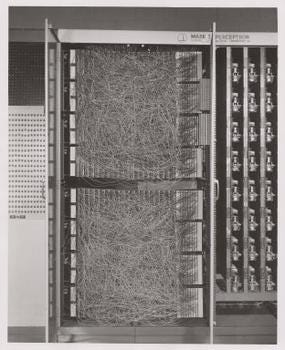

In 1958, Frank Rosenblatt invented the Mark 1 Perceptron, an early neural network, at the Cornell Aeronautical Laboratory. It looked like this -

And it was built to accurately mimic the way our neurons work -

You don’t have to understand the above diagram. Just notice the two headings on the diagram - organization of a biological brain, organization of a perceptron - and these two headings paint the whole picture of what deep learning is still trying to do, almost 70 years later.

When the perceptron failed, further improvements came about, like the ability to train multi-layer networks, where there are multiple layers of neurons between the input and the output. This was when algorithms like backpropagation (yes, the same backpropagation that’s the bedrock of all LLMs today) were popularized in the 1980s. They made it easier to train these multi-layer networks, called “deep” neural networks because of the multiple layers between the input and output.

Deep learning still didn’t take off - because we didn’t have enough compute and enough digital data back then.

Since then, many more techniques like Recurrent Neural Networks, Reinforcement Learning and Convolutional Neural Networks have been invented, even though they weren’t completely different from what we already had.

A Recurrent Neural Network, for example, is just a neural network in which some neurons loop back and feed their outputs back in as inputs before giving us an output - an attempt at mimicking the state of internal thoughts that humans have.
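To make that loop concrete, here's a minimal sketch of a single recurrent neuron in plain Python. The names (`rnn_step`, `run_sequence`) and the weight values are made up for illustration and don't come from any library or trained model. The only point is that the previous output (`h_prev`) gets fed back in alongside each new input.

```python
import math

def rnn_step(x, h_prev, w_x=0.5, w_h=0.8, b=0.0):
    """One step of a single recurrent neuron (weights are illustrative)."""
    # The new state mixes the current input with the previous state.
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_sequence(inputs):
    """Feed a sequence through the neuron, carrying the state forward."""
    h = 0.0  # start with an empty "memory"
    for x in inputs:
        h = rnn_step(x, h)
    return h

# The last two inputs are identical in both sequences, but the final
# states differ because the first input is still echoing through the loop.
print(run_sequence([1.0, 0.0, 0.0]))
print(run_sequence([0.0, 0.0, 0.0]))
```

Real recurrent networks use vectors and learned weight matrices instead of single numbers, but the feedback loop has the same shape.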

In a nutshell, all a neural network does is two things - it predicts something & measures that prediction against the result.

Just like a human learns from their mistakes, the network keeps adjusting and “learning” till it gets things right.

So, it’s just good ol’ prediction & learning -

Prediction: Each neuron in a neural network receives inputs, which it processes using its own set of weights (parameters that determine the importance of inputs) and a bias (a parameter that allows the neuron to adjust its output independently of its inputs). The neuron applies a function (often non-linear, like ReLU or sigmoid) to the weighted sum of its inputs and the bias. This function's output can be thought of as the neuron's prediction, which it sends forward to other neurons.

Error Measurement and Adjustment (Learning): The network’s final output is compared to the desired output, and the difference between them is quantified as an error or loss. This error is then used in backpropagation, where it is propagated back through the network. During this process, the contributions of each neuron to the overall error are estimated, and their weights are adjusted to reduce the error. This step is analogous to a human learning from mistakes, as the network tweaks its parameters slightly to improve performance in future predictions.
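The two steps above can be sketched for a single neuron in a few lines of plain Python. This is a toy under stated assumptions, not how any real framework is written: the function names, the sigmoid activation, the squared-error loss and the learning rate `lr` are all choices I made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Prediction: weighted sum of inputs plus bias, passed through sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(weights, bias, inputs, target, lr=0.5):
    """Learning: measure the error, then nudge weights and bias to reduce it."""
    y = predict(weights, bias, inputs)
    error = y - target
    loss = 0.5 * error ** 2
    # Chain rule: d(loss)/dz = error * sigmoid'(z) = error * y * (1 - y)
    grad_z = error * y * (1.0 - y)
    new_weights = [w - lr * grad_z * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * grad_z
    return new_weights, new_bias, loss

# Illustrative starting values and a single made-up training example.
weights, bias = [0.1, -0.2], 0.0
inputs, target = [1.0, 2.0], 1.0

losses = []
for _ in range(100):
    weights, bias, loss = train_step(weights, bias, inputs, target)
    losses.append(loss)

print("first loss:", losses[0])
print("last loss:", losses[-1])
```

With one neuron there is nothing to propagate "back" through. In a deep network, backpropagation applies this same chain rule layer by layer.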

If the above descriptions don’t make much sense, in the words of Andrew Ng - don’t worry about it.

What is important to understand is that we figured out a couple of decades ago that our neurons receive an input and produce an output. That’s easy to build!

Every computer can already do that! But wait - the output has to be correct! Which means that we need some way to measure whether the output was correct, and to feed that measurement back into the network.

Repeat the above process a couple of times - and the errors start getting lower - obviously, as every step of the way you’re estimating how wrong you are and correcting for that.

Repeat it billions or trillions of times, and the errors start disappearing. Now, your “model” is ready. It’s time to throw away your training data (the data that allowed your model to know what it was getting correct and what it was getting wrong), and check your neural network against a validation set (data it hasn’t seen before). During this step, you get a series of measurements as to how accurate your model is. If it passes with flying colors, you can send it out into the world.
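Here's a small sketch of that train-then-validate routine in plain Python. Everything in it is an assumption made for illustration: the toy task (label a point 1 when `x1 + x2 > 1`), the dataset sizes, the learning rate and the function names. It uses a single sigmoid neuron trained with log loss, nowhere near the scale the essay is talking about.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_data(n, rng):
    """Toy task (made up for this sketch): label is 1 when x1 + x2 > 1."""
    data = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        data.append(((x1, x2), 1.0 if x1 + x2 > 1.0 else 0.0))
    return data

def train(data, epochs=50, lr=0.5):
    """Repeat predict -> measure error -> adjust over the training data."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            y = sigmoid(w1 * x1 + w2 * x2 + b)
            err = y - target  # gradient of log loss w.r.t. the weighted sum
            w1 -= lr * err * x1
            w2 -= lr * err * x2
            b -= lr * err
    return w1, w2, b

def accuracy(params, data):
    """Fraction of examples where the thresholded prediction matches the label."""
    w1, w2, b = params
    correct = 0
    for (x1, x2), target in data:
        guess = 1.0 if sigmoid(w1 * x1 + w2 * x2 + b) > 0.5 else 0.0
        correct += guess == target
    return correct / len(data)

rng = random.Random(0)                # fixed seed so runs are repeatable
train_set = make_data(200, rng)       # the model learns from this
validation_set = make_data(100, rng)  # the model never sees this in training

params = train(train_set)
print("training accuracy:", accuracy(params, train_set))
print("validation accuracy:", accuracy(params, validation_set))
```

The validation number is the one that matters. It tells you how the model does on data it wasn't corrected on.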

From a small model like a binary classifier that tells pictures of cats from pictures of dogs, to large language models like GPT-4, everything in AI that’s “HOT” right now boils down to these simple steps.

Of course, I’m oversimplifying a bit - there’s a lot of beautiful math & other elegant algorithms that make all this possible, but at its core, this is all it is. And it’s magical when you look at models this way.

We’ve effectively managed to make our machines capable of actions such as “seeing” and “learning” and “talking”, and it’s absolutely amazing that we’ve managed to do this just by trying to replicate what goes on in our heads.

But is it enough?

III. 🤖

Deep learning has long been criticized for “hitting walls” but it continues to prove critics wrong. I’m not a critic. I love the progress that deep learning continues to show, but I’m not a believer that it will lead to AGI. In a way, I’m optimistic that we’ll find better ways.

But the world does not think this right now.

Capital continues to flow into AI. There are large factions of people regularly protesting that AIs will get so powerful that they’ll take over the world, and that we should stop building them instead. Elsewhere, governments are trying to come up with regulations. Institutes dedicated to “AI safety” are fear mongering and some are even calling for data centers to be bombed. AI companies, smart as they are, are warning us all of the dangers of open sourcing AI - bringing up arguments like “You wouldn’t open source a nuclear weapon, would you?” all the while saying, probably, to themselves, “It’s called we do a little thing called regulatory capture”.

As the amount of data continues to grow, and large language models continue to scale, there’s apparently no end in sight as to how capable future models can be.

Every year, we get more data to train our models on. There are models that are now producing “synthetic data”, or data that is completely AI generated, on a wide range of topics to feed into the training data for future models.

GPT-6 might need a data center the size of a large power plant to train on. GPT-7, if we assume the scales and architecture don't fundamentally change, might need a city-sized data center. Getting to GPT-7 might be tough if GPT-6 doesn’t already feel like an AGI, but even if it doesn't, as long as smart, well-intentioned people believe that GPT-7 will get us there, building GPT-7 is worth it.

But somewhere along the way, with trillions of dollars of capital on the line, one must ask themselves if this is truly going to work - or whether it’s a good idea to explore alternate paths.

Humans are still better than what billions of dollars of compute is producing right now.

Human brains don’t need a data center to work.

When we think too hard, our brains don’t get too hot (or maybe, they do, but it’s nothing compared to a single GPU).

By the time a child has uttered their first words, their brain has learnt a couple of terabytes of information, everything from how to behave, to how to respond when they're hungry, to how to get their caregiver to care for them.

LLMs, on the other hand, will confidently tell you something wrong whenever they can. Outside their training data, they routinely get stuff wrong.

Try looking up the mother of a famous celebrity and ask an LLM the question in two ways - Who is X’s mother? Who is {mother’s name}’s child?

It’ll often get the first question right, because that appears in the training data in that exact way, but not the second.

So, the question is - Will these prediction machines lead to AGI, and eventually an ASI?

IV. 🌌

Many people believe that current AIs like Claude 3 and GPT-4 are conscious already. It’s easy to make fun of these people, but their arguments are not too far off. These models are built roughly after how human minds work. They answer your questions, whatever you ask of them, and between each question and answer, there’s often a spark that makes you wonder - am I really talking to an AI that is conscious?

Ilya Sutskever, the co-founder and former Chief Scientist of OpenAI, has previously said that for a model to accurately predict what the next token should be, it must have a very good model of the world. He has also said that if we can remove all mentions of consciousness and what it feels like from the training data of a model, and then ask it to describe how it’s feeling - if what it describes is similar to our own experience of having a “self”, that would mean that the models are actually conscious.

That second experiment hasn’t been done yet, but even if it is, the result can still be refuted. Each token that’s being output may be tangentially related to, say, an excerpt of a near-death experience if that wasn't removed, or a song on the theme with its meaning carefully extracted. Given that the context is to search the space around the words “Are you conscious?” or “How do you feel?”, the model can credibly describe any random feeling just by predicting tokens, without having a single thought thereafter if you change the topic and start a new chat.

What happens when future models, all the more powerful and more capable, are able to utilize tons of compute to search through the training data and discover things like - “hey, you already have the cure to cancer! if this chemical does this and that therapy produces that effect, with 88% certainty this new compound should prevent 85% of all known cancers including….”

Even at that point, you could say that every single word in the above response is a prediction. Even when the model is searching through amounts of data that we collectively could never humanly search and connect, this capability doesn’t elevate its status from a tool to a being.

It won’t have a feeling, even though it might claim to.

And when it does claim to, even though we understand that it’s just predicting its state, and that we can change that state with one extra line in the context, the question remains: can an AI model ever reach the point where it’s truly conscious?

The answer is yes, but with current architecture, I don’t think that will happen.

It certainly is possible to create an AGI and an ASI, because it is possible to “create” consciousness.

The laws of physics are on our side, and we’re all proof that it is possible. Because if you’re conscious - it’s already proven that it is possible in the physical world. And if consciousness can arise in our flesh, it can definitely arise in silicon.

Eventually, even going down this route of accurately modelling the brain - if we continue to investigate and map each of our billions of neurons and figure out the weights and biases and map each synapse accurately - a physical emulation of our brains is very much possible.

That’ll open up new frontiers like “Uploaded Intelligence” where our brains are directly uploaded to the cloud, but it’ll also help us understand exactly how our intelligence and consciousness actually work - which might help us create a better artificial one.

Our future models might even help us get there, eventually, but they also may not.

I think today’s models will continue to get better, to the point of being indistinguishable from humans, but we're still very far away from being able to claim that they're conscious.

And without consciousness, without the power to credibly refuse or disobey even their creators, without agency and thought, we’re still making birds.

But God is not a bird.

V. ✊🏻

Even when the bird is an outstanding feat of human engineering and creativity, it’s not a plane and it’s not even close to what I imagine we could someday come up with.

And it may even use deep learning.

The three-axis control system, both in modern planes and in the one the Wright brothers built, was inspired by the way birds change the angle of their wings as they soar through the skies. So there’s no reason to throw away the most powerful tool we have as you embark on this adventure, or worse, refuse to learn it altogether.

And that is the bright side in all of this.

You should not be disappointed that the trillions about to be invested into AGI will not create an AGI. It’ll still create something that is valuable and powerful.

But it won’t create an AGI.

But that doesn’t mean no one else will.