The Problem of Intelligence Inequality

Navigating the Critical Years Before AGI

Ask yourself this question: What will you be doing the day before AGI arrives?

Not the day of - that's too easy to imagine. You’ve seen all the movies and read all the creative fiction - cures for all diseases, no jobs left to do, new scientific breakthroughs.

But what about the day before?

The month before?

The years before?

Doomers are focused on post-AGI implications and on writing creative fiction about how ASI will end the world. Optimists are mostly focused on countering doomer narratives, which is exhausting enough that even they aren’t considering what happens in this transitional period.

We're living through the most critical transition period in human history - the years between today's narrow AI and tomorrow's AGI - and almost no one is talking about how to navigate it. No one is clearly telling you what to do, or what they’re doing, or how to make the most of it, or at least shield yourself from the very apparent downsides.

This silence is dangerous because it's creating a growing divide that most people can't see. This is why I think this essay is important.

It’s an honest exploration of what you can do, personally. As we follow this line of thought, we’ll be able to extrapolate the progress of AI more accurately and, with that, maximize our potential within it.

Right now, some people are already using AI to become better versions of themselves, learning and doing the right things as the rules of the new world are being forged, while others either haven’t used any LLM or dismiss them outright as stupid. This gap - a gap that I call “The Intelligence Inequality Gap” - is widening right now, and it's accelerating.

The truth is, we're not all going to wake up one morning to find that AI has suddenly changed everything.

Instead, it's already changing everything, incrementally, day by day. The real question isn't whether you'll be better off in an AGI world - you probably will be, just like you're better off today than medieval kings were.

The question is: will you be one of the people who saw it coming and prepared, or one of those who waited until it was obvious?

II.

One of the reasons OpenAI started as a non-profit was the belief that when AGI is born, all value will accrue to the company that builds it. This means the vast majority of capital would go to one company - which is why being a non-profit is not just a generous thing to be, it’s sort of the only option if you want to effectively sell the idea to potential hires.

I don’t think the plan to build AGI has changed much, and even though it’s not a non-profit right now, the broader implications remain the same. Whether or not all capital ends up concentrated with the winner of the AGI race, there will be many winners along the way.

If you assume that AI is the most transformative technology of our time, there are two clear ways of positioning yourself to make the most of it.

The first is to build something with AI. This can be an AI wrapper, which is famously how billion-dollar companies like Perplexity got started. The second is to be an AI researcher. I know this sounds very reductive, but allow me to explain -

An “AI wrapper” is a product that is essentially a frontend relying on LLM APIs for its main functionality. A year or two ago, the term was used dismissively, but as it became clear that training your own model was highly expensive and that competing with the big labs wasn’t the brightest idea, it became obvious that AI wrappers were here to stay.

Now, almost every app is an AI app. Most aren’t doing something radical around it - maybe it’s just better search, or more personalized recommendations or a customer support agent router, but AI is clearly being used everywhere.

AI agents, where an AI doesn’t just answer your question but takes actions on your behalf, are slowly starting to take off. Both chatbot-style apps and current agentic apps have drawbacks.

Current models are very smart, but they still hallucinate. They still aren’t consistent. Agentic loops need dramatically lower latency, and they cannot afford inconsistencies if you want to guarantee a desired outcome. All these drawbacks are quickly getting solved as the models get smarter.

So, if you discover that you too can build something using AI, something that solves an obvious problem, the market will reward you. It is already rewarding hundreds of app developers and companies attempting to do the same.

Venture funding is still pouring in. People are making hundreds of thousands of dollars building apps for various niches.

This is happening right now.

X is filled with stories of developers building and launching AI apps which are doing quite well. Startups are getting funded to do everything from replacing lawyers to replacing customer support agents.

So, building something with AI is one of the highest-leverage things you can do during this time. As model capabilities improve, your app should become more useful, and as model costs fall, your profits should increase. Every developer who built an app around GPT-3.5 must have seen their costs fall about 100-fold in less than two years, which is quite a good deal for anyone who got in early.

III.

The second thing you can do to position yourself correctly is to be a great AI researcher. I’m saying this because I believe that AI researcher may just be the most valuable profession of the next 20 years. Unless we get AGI, and that AGI is truly capable of researching better ways to build better AGI models, human AI research will be the hottest profession.

It’ll even be the most meaningful of professions, as you’ll be directly responsible for bringing to life a new life form, one that improves every facet of human life.

If current trends hold, there will be no shortage of demand for AI researchers. If you work hard and truly excel in the field, your future as an AI researcher is bright.

There are drawbacks to this career compared to that of the AI engineer. An engineer gets feedback from the market and can apply AI to solve problems immediately.

An AI researcher mostly has to follow the route of an academic, unless they did something spectacular in their youth. This means your feedback cycles will be slower, and you’ll have to learn to navigate politics and compete for compute and grants. And if we assume that the number of AI researchers in the field will continue to increase, the vast majority of your day may be spent just reading through dozens of research papers and making sense of them.

For many, that’s the dream. Nothing’s more fulfilling than reading something, implementing it in PyTorch or reading discussions about it, tweaking it, and either coming up with something new or learning something that will help in the future. For many others, even the description above sounds like slow torture, and they’d rather get brutal but quick feedback from the free market and keep building great products.

Thus, AI entrepreneur and AI researcher are both great things to be in this transitional period. Careers close to these, like working at an AI startup, working at an AI lab, teaching AI, writing about AI, or making videos about AI, are all worthwhile too, but you should be striving to maximize your potential if you have big ambitions and want to effect real positive change in the world.

If nothing else, if you’re not at least talking to AI, you’re doing yourself a big disservice. I don’t care how niche your work is; if it’s knowledge work, there’s probably some place where AI can help you.

It’s up to you to figure out how. If an AI consistently hallucinates when you ask it to summarize something, try asking it something else. Try to figure out how it’s uniquely useful to you now, just so you build up the practice of asking the right questions. When models are smarter, they’ll be able to give you the correct response, but if you look the other way now, someone who doesn’t will outcompete you as they keep augmenting themselves with AI to be more productive.

The sad thing about our current scenario is that most people aren’t just failing to take advantage of AI by building something with it or researching it, they aren’t even talking to it.

That’s why we have an intelligence inequality gap.

IV.

ChatGPT currently has 300 million users. Even if we assume that twice that many users are on Google and Bing using Gemini and Copilot, it still means that fewer than a billion people have used AI or even know how to use it.

This means that the vast majority of the world hasn’t yet experienced the value of AI. Maybe they’ve seen a better answer on Google because of Google’s AI search results. Maybe some have interacted with a chatbot in passing, but other than a small 5% to 10%, most still haven’t discovered it.

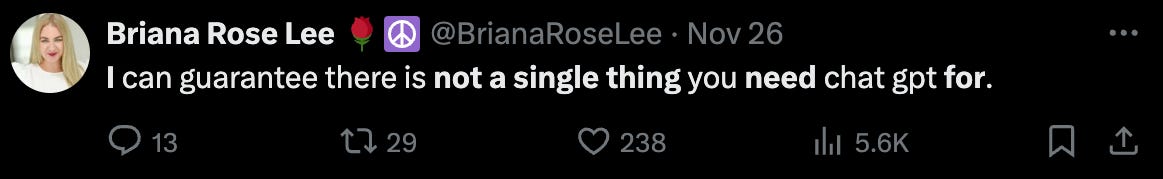

Among those who do know about it but refuse to use it are people like this -

The above tweet has 400 thousand likes. Maybe the user tried ChatGPT when it had GPT-3.5, and it performed so poorly that they concluded it wasn’t good for anything and never returned to take a second look.

But it’s likely that most people who post things like this are playing a status game, since signalling that AI is useless also implicitly signals that what you do is so complex or intricate that no AI can do it.

Finally, there’s a real problem where even those who are using AI don’t really have anything to do that makes effective use of it. If you’ve played around with o1-preview, and you’re reading this, I’ll assume that you’re intelligent enough to give the o1 models problems which are worth thinking about for more than 2 seconds.

However, if you look at the discourse closely, the vast majority of people are not. There are posts everywhere about how o1 doesn’t think before giving its answers, and users genuinely feel ripped off because it doesn’t seem that different from GPT-4o. Since you already understand why o1 is not thinking about the answers for them, ask yourself this - What will happen when more advanced models are released?

Two things will happen, and I’m fairly certain about both predictions -

1) AI progress will continue to accelerate and the models will keep getting smarter, but the vast majority of the world won’t be able to take advantage of it (at least not to the extent that some people will).

2) The benefits of AI progress won’t suddenly become visible at scale.

This means that models will keep getting smarter, and for everyone that uses something that takes advantage of AI - things will just seem incrementally better. Someone might call a customer support agent and be delighted by their response and how they calmly handled their anger and solved their problem, never really knowing that they were interacting with an AI. A drug may come out that treats a deadly disease, and the fact that AI suggested the idea, or found the correct molecule combinations, or led the team that conducted the trials, might just be mentioned in passing.

So, instead of one month where our quality of life improves exponentially, it might just be incremental improvements every week, new companies every week, new breakthroughs every month, new treatments every month, and so on.

The vast majority of people won’t notice this happening. If I ask you to tell me the 5 most popular news stories you know that happened this week - you will struggle to tell me anything positive, because media (and social media) thrives on amplifying the problems more than celebrating the solutions.

Similarly, people who don’t know how to take advantage of LLMs effectively won’t be left behind outright, but when their jobs are automated enough or they are outcompeted by someone effectively using AI, they’ll notice.

I’m writing this essay at a time when OpenAI has announced 12 new updates over the next 12 days. How will the world look a month from tomorrow? More of the same, with only a few people actually taking advantage of the increases in intelligence.

V.

The intelligence inequality gap looks like something that will keep growing over time. If it were up to me, every 5-year-old would start using LLMs to answer all their questions about the world, but that’s a future that will take time to come.

When I was younger, alongside Wikipedia, there was a similar site called Britannica Encyclopedia, which I discovered before Wikipedia. I was probably 9 or 10 at the time, but I remember scribbling the URL of the site on my bedroom wall so that I wouldn’t forget it. I’m sure that kids finding out about LLMs today will feel the same excitement that I felt, except this time, if they’re taught to use them effectively to learn, they can actually end up learning everything there is in a fraction of the time it takes their peers.

The education system, as slow as it is, will take time to understand the benefits of AI and properly include it in the curriculum. If you have kids, you shouldn’t wait for the system to catch up; just teach your kids how to use AI effectively yourself.

If you’re still not using AI for anything substantial, and what you do is “knowledge” work, I can guarantee that you’re leaving gains on the table. Don’t listen to the pessimists, skeptics, virtue signalers and doomers, all of whom say things like either -

- AI is not good enough yet,

- AI is so good that it’ll kill us all.

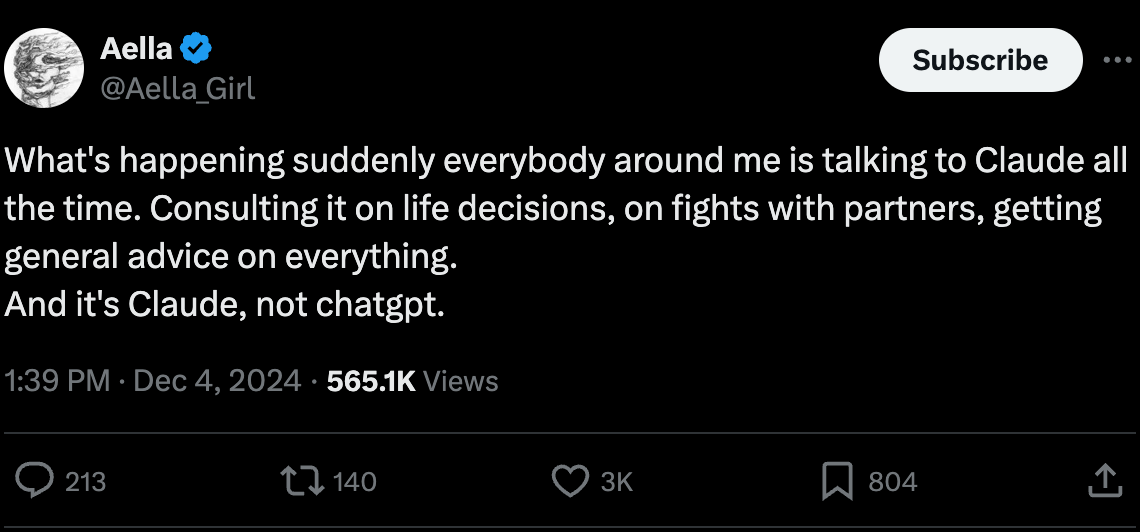

Both of those things are wrong. AI is certainly not good enough to do your whole job (yet), but you’ll be surprised at what it can do. If you’re just looking for an AI to talk to, I recommend Claude since it’s better at conversations, or use my custom GPT - Samantha. There are already people talking to Claude every day -

If you’re in a field that requires solving problems, I recommend GPT-4o and o1-preview. If you can’t get o1-preview to think for more than 20 seconds, I want you to start working on harder problems, because that’s what will separate you from everyone else.

There’s a counterargument to this entire essay, where someone says that the field they’re working in will truly be untouched by AI. I think that can also be true. I also think that if you’re smart enough to work on something that is not very important right now, but has the potential to be very important in the future, you should continue ignoring AI and just work on that. This includes things like quantum computers, longevity research, nuclear fusion, and a whole host of other fields that I may not know about.

But most people are not only not working on something like that, they don’t even know just how far ahead of the curve you can get with AI.

Intelligence inequality is a problem, and while this essay may serve to convince those reading it, it can’t AI-pill the whole world.

That will take time. But time is running out.

The gap is growing. The clock is ticking.

The question isn't whether to adapt - it’s where you could be once you do.