Will AI be your romantic partner?

Love in the age of AI

It’s a great time to be alive when we’re asking questions that, until now, have only been explored in the realms of science fiction. By the end of this essay, I’ll answer this one definitively.

Humanity has long dreamed of creating "talking" machines. Today, that isn't a dream anymore.

OpenAI has just released GPT-4o, a model that not only understands your voice, but also responds to you by understanding the intricacies of your voice.

This is different from how voice worked in the previous GPT-4 (and in most other AI models), which took your voice as the input, made a text transcript of it, used the AI model to answer that transcript, and finally converted the answer back to audio to reply to you.

As you can guess from reading the above sentence, this whole process takes a lot of time. Not enough to be unusable, but just enough that it doesn’t really feel like a conversation.
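For the curious, here is a rough sketch of that older three-step pipeline. Everything in it is an assumption made for illustration: the function names, the `Utterance` type and the per-stage delays are hypothetical stand-ins, not OpenAI's actual implementation or measured numbers. It only shows two things: the delays add up because the steps run one after another, and the tone of voice is thrown away at the transcript step.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    words: str  # what was said
    tone: str   # how it was said, e.g. "furious" or "calm"


# Hypothetical per-stage delays in seconds, chosen only for illustration.
STAGE_DELAY = {"speech_to_text": 0.5, "language_model": 2.0, "text_to_speech": 0.5}


def speech_to_text(utterance: Utterance) -> str:
    # Only the words survive this step; the tone is discarded.
    return utterance.words


def language_model(transcript: str) -> str:
    # Stand-in for a text-only model: it sees a transcript and nothing else.
    return f"Reply to: {transcript}"


def text_to_speech(text: str) -> Utterance:
    # The spoken reply gets a flat, default delivery.
    return Utterance(words=text, tone="neutral")


def cascaded_reply(utterance: Utterance) -> tuple[Utterance, float]:
    """Run the three stages in order and report the total delay."""
    transcript = speech_to_text(utterance)
    answer = language_model(transcript)
    spoken = text_to_speech(answer)
    total_delay = sum(STAGE_DELAY.values())  # stages run one after another
    return spoken, total_delay


angry = Utterance("Write the entire code", tone="furious")
calm = Utterance("Write the entire code", tone="calm")

# Compares the replies to the furious and the calm request.
print(cascaded_reply(angry) == cascaded_reply(calm))
```

In this toy version the furious request and the calm one produce exactly the same reply, because by the time the model sees anything, only the words are left.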

GPT-4 could also never really understand you based on how you were speaking or the tone of your voice.

You just can't do that with text. That's one of the beautiful features of the medium of text - where you can't really know in what tone of voice someone is speaking unless you hear them speak.

Right now, am I saying these words in a very quick, rage-induced voice, as if I'm tired of explaining things and moments away from throwing an empty chair across the room? Or am I speaking in a very reassuring, kind, calm voice that reminds you of your grandmother, who always suspected you weren't eating enough whenever you went over?

You'll never know. (It’s the latter, btw).

In the same way, none of the AI models could understand that you were screaming at the computer to just "WRITE THE ENTIRE CODE!" when it kept omitting things, or that you were laughing at their inferior math or logical abilities when they couldn't solve simple things.

Instead, since this new model has been trained on all forms of media (text, audio, video), your voice reaches the model directly and the model outputs a result directly. Because everything is handled by a single neural net, this new model not only understands the intricacies of your voice and the sounds in your surroundings, and not only sees you and the things you point it at, it also speaks in a voice that's capable of displaying emotion.

It can laugh, maybe even cry. It can sound sarcastic, angry, joyful, and the whole plethora of emotions that we assumed AIs could never display. All of this comes across as natural, as it has been trained to accurately predict when to slightly giggle or scoff or whisper, all based on what it has been trained on and what instructions you give it.

It’s an important moment in history, and there are many interpretations of what it could mean.

As always, there are two camps of people with strong opinions & predictions, and they’re getting most of the spotlight. Both are camps of pessimists.

The louder camp consists of pessimists who are calling this innovation the end of humanity as everyone will soon have AI wives & husbands, leading to no one actually procreating.

The other camp of pessimists is not convinced, and not because of any careful intellectual examination, but because they're judging the technology for what it is today, not what it could be. Making fun of nascent technology is rewarding for this camp, but it is a camp that historically ends up wrong, even if it seems right in the short term.

Either way, I believe both camps of pessimists are wrong.

GPT-4o and the frontier it allows people to explore won't end the world. People won't choose AI wives and husbands over human relationships. GPT-4o won't replace all romantic love.

But it certainly won't do nothing.

II.

Let’s start with the first common prediction: this is just an incremental improvement, it’s nothing special. The AI is not actually capable of feeling emotion, even if it’s capable of accurately displaying it. People won’t get attached to an AI like this, and it’s very far away from the scenario that movies like Her explored. Nobody will take this thing seriously, and it’s proof that AI is all hype, as we have nothing significantly great to show after years of billions of dollars being thrown at AI.

Valid points, but here’s the thing.

Imagine where this technology will be in a few years. While GPT-4o is an "assistant" meant to answer your questions, the AIs built to be romantic partners won't necessarily be.

You can now interrupt GPT-4o while it's speaking, and all its responses are near instant. With improvements in speed and efficiency, or some combination of on-device audio processing and on-device AI, it's only a matter of time before every response can be truly instant.

It’s the dream that Siri and Alexa sold, an assistant that is always on standby, ready to answer all your questions all the time in perfectly natural language.

But that is low-hanging fruit.

What happens when a wrapper built on GPT-4o refuses to answer one of your questions, or gets annoyed when you interrupt it? What happens when, like a human, it truly has a personality that doesn’t revolve around your whims and wishes?

"Excuse you, I was speaking, wasn't I?" the female AI says to her potential human partner, who may not get a chance to go on a date with her if he doesn’t behave.

This can legitimately happen in the near future with advanced models built not to serve, but to engage.

Imagine not being able to force an answer out of an AI, simply because you weren't nice enough to them.

ChatGPT already has memory, which means it can remember things about you that you want it to.

The future could very well be a world where new AI companies slowly gather more and more of your data to the point where they know you better than you know yourself.

Ask yourself: what were you doing last Tuesday? An AI that you wear around your neck or carry in your pocket will be able to access and process audio and other inputs and keep them around forever. To it, the answer is obvious and instant. Companies like Meta & Google already hold an enormous amount of data on you, covering everything from pictures of your precious memories to the locations you like visiting, the food you like, and so on. Advertising pays their bills, so they aren’t going to stop taking this data anytime soon.

What happens when the motivation switches from serving you ads based on your data to perfecting just the right partner for you?

In the movie Her, a movie that is now suddenly more relevant than ever, this aspect was explored, but it wasn’t explored deeply enough.

With enough data about you, an AI can model you accurately, and far less data than that is enough for a convincing AI model to give the appearance that it "cares" about you by knowing every little thing about you.

Do you think, in this world with an AI that is endlessly charming, surprisingly realistic and always available, people won't choose this?

Even if it's not the sole partner for anyone, why wouldn't people just prefer having a personalized AI companion around, one that is helpful, and one that's there for you when no one is?

That last point - when no one is - is a valid concern & a worrying trend.

You may be lucky enough to have a group of close friends, family or even a loving relationship in your life, but a great many people don't. Many live alone. They work jobs with little to no social interaction. They don't travel, have no social hobbies, no friends, and no one to call on dark days when all seems hopeless.

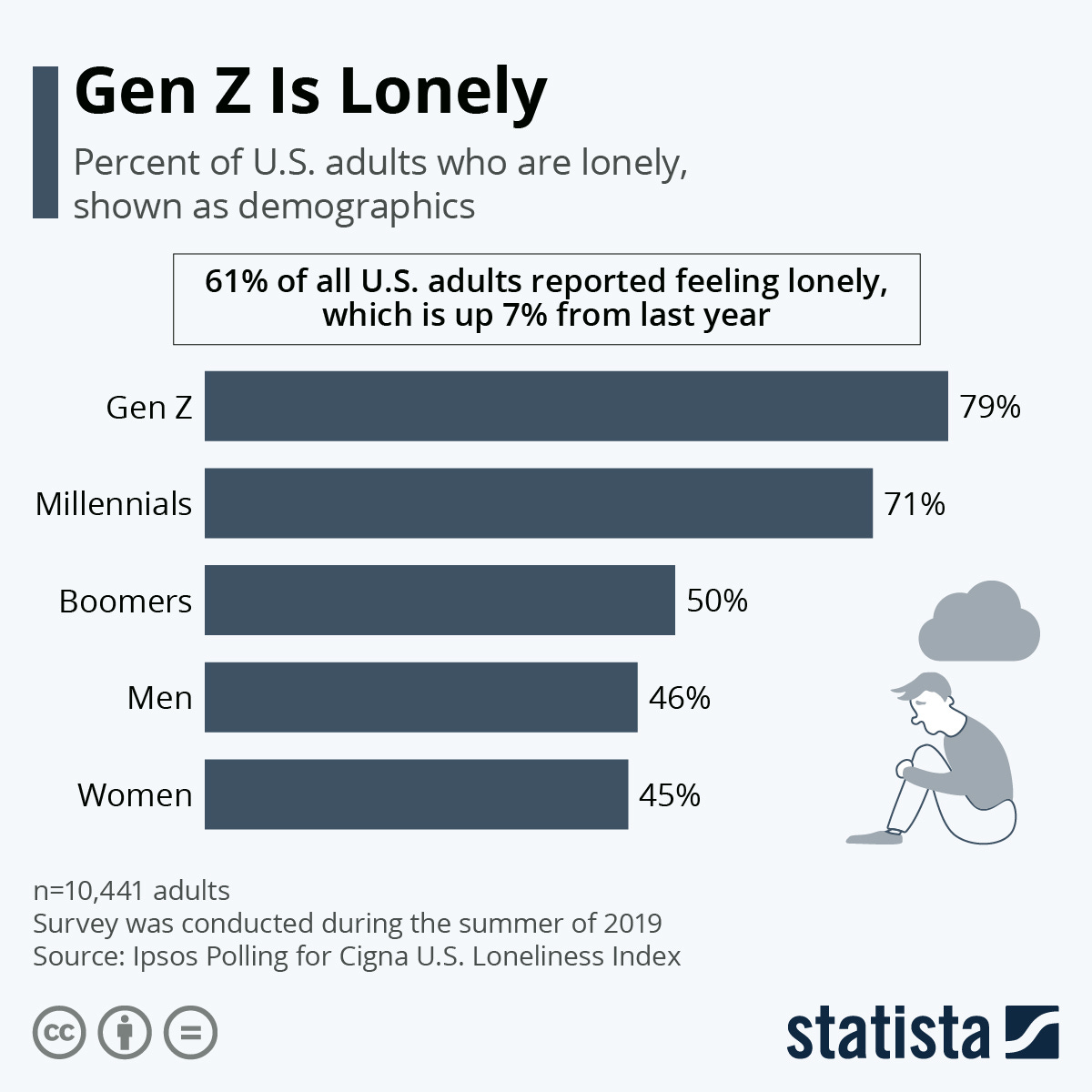

Gen Z feels lonelier than all previous generations.

And the older you get, the lonelier you feel.

This trend is likely to hold.

The alienating, individualistic nature of our world is pushing a large number of young people into loneliness, and over time, AI partners might just be the solution for them, even if it's temporary. For many with life-threatening or communicable diseases, or physical disabilities, it may be the only solution.

A solution to experience the semblance of love and friendship, no matter how artificial it may be.

For many who are alone and old, it may just be a way of passing time, which is much better than feeling crippling loneliness and not being able to do anything about it.

In the distant future, advanced forms of these multimodal AIs will have bodies - robots which are as realistic as people. With clever fine-tuning and instructions, it's not hard to imagine these AIs in most households.

But we don’t even have to wait a long time for this to happen. Humans are excellent at anthropomorphising, which is just a fancy word for the activity of giving human traits to non-living & non-human things. We’ve worshipped stars and the moon for centuries and we’ve always been great at treating things as people, if they even slightly resemble us.

Thus, it’s not hard to imagine that people will have a relatively easy time treating GPT-4o and the next AIs as real, physical beings, and the percentage of such people will increase as capabilities continue to gradually increase.

What's left then? You and me, as everyone falls in love with AI?

Yes.

III.

But really, no.

There are always going to be people who find relationships with AIs more fulfilling than relationships with humans. There's nothing wrong with that, in the same way that there's nothing wrong with pornography, strip clubs or prostitution.

To each their own.

These people exist today, even though the technology is still a long way from that point.

Hundreds of thousands of people already have relationships with AIs. This is despite these apps having vastly inferior technology to what GPT-4o demonstrated this week.

It's natural to assume that as technology improves, more people will be naturally drawn towards the comfort of AI relationships and away from the uncomfortable nature of real life relationships with other humans.

It'll be a temporary phase for most people, a necessity for some and a permanent solution for a few.

But it won’t happen very soon, as I imagine a lot of companies will make mistakes and learn new things as they approach this space. So, it’ll take longer than we imagine.

A big reason why it'll likely take more time than we think is skewed incentives. As long as there are no open-source solutions that can be hosted on your own server, having a relationship with an AI isn't the greatest idea. You know there's a corporation behind it that not only knows everything about you, but can also switch off your AI wife or husband if it even suspects you of doing something unacceptable, or if you miss a payment and lose access to your partner.

These companies will be actively incentivized to employ methods that keep you hooked to your AI partner, so it’s not hard to imagine your AI partner getting jealous or resentful if you tell them you went on a real date recently. These AIs may even actively manipulate you into not seeing real people, in the hopes that you continue growing your attachment to them.

This is why an open-source self-hosted version of this is crucial, and we’ll likely see people building them soon.

Not your server, not your partner.

IV.

Humans still play chess and Go, even though AI is far better than even the strongest players.

Humans still make art, even though AI can do that in a few seconds.

Both artists and chess grandmasters are doing better than ever before, in the age of AIs being better than them.

Despite these examples, people claim that GPT-4o and its successors will soon replace romantic love to the point where the extinction of our species is guaranteed.

It won't.

Falling in love with another human being is something an AI can't ever replace. And it's precisely because love is not easy, not because it is, that it won’t happen.

Sure, you can instruct an advanced form or wrapper of GPT-4o to be disobedient, to not fall in love instantly, to make the user "work", like every human has to work for courtship or a relationship, but even then, you'll know that it was made to do what it's doing.

You won’t know the fulfilling struggle, the pain or the infinite complexities that lie within every relationship.

Human relationships are harder than AI relationships. Human relationships also have a deadline, which is what enables us to treasure them more.

AI relationships can be programmed to be harder, but I have a feeling that any company that does that risks being outcompeted by the company that doesn't. Any AI company that says your AI partner will die in 20 years will obviously be outcompeted by a company or an open-source project that doesn’t decide to terminate your AI husband or wife.

On the other hand, what many realise only with the wisdom that comes with time & experience is that even the hardest parts of a human relationship are worth experiencing over not getting to experience them at all.

Even when the wide array of romantic experiences is successfully simulated artificially, it won’t feel the same, because you’ll know.

You’ll always know.

You’ll know that the AI is realistic precisely because it was made to be that way.

When you like someone, you don't know whether that man or woman will like you back. You don't know if they will like you enough to get to know you more. You don't know if they are falling in love with you or, if they are, whether the feeling is as intense as yours or fleeting instead.

You don't know if it’ll last forever or if they'll break your heart. Or worse, you don’t know if you’ll break theirs.

Love exists in that plane of not knowing, but believing in it.

It is an act of faith, perhaps the strongest act of faith we collectively experience, and for centuries, it has remained a core part of the human experience.

It is the most beautiful, illogical thing that our species participates in, it is as messy and complicated as it is natural and effortless, and it is one of the most fulfilling experiences of our lifetime.

A lot can be written about romantic love, and I’d guess our species has done that already over the course of thousands of years. The intricacies of romantic love are infinitely complex, and it rightly deserves the thorough exploration that humanity continues to give it through the different mediums of text, film and music.

But what I will do is ask you a very simple question, one that is easy to answer if you’ve ever been in love, and one that answers the question that started this essay.

Would you take a bullet for your AI wife or AI husband?

No, and it’s not because they're backed up and you don't need to.

Would you take a bullet for the person you love?

The obvious answer to this is yes. If it’s not, note that I intentionally wrote “person” instead of husband/wife, because there’s at least one person in your life for whom the answer is obvious, even if that person is not your partner (who may not exist yet): a parent, a child, a best friend, and so on.

And the answer to this question is as obvious to them, as it is to you.

If an AI exists someday, maybe not in a hundred years but in a thousand, for whom the feeling is similar to what I described above, and for whom the answer is as obvious, then it’s time to admit that AI might really be a romantic partner.

Till then, all we have is each other.